AI-generated music is everywhere; is any of it legal?


Musician Dustin Ballard has turned a spare room in his Dallas home into a soundproof studio. Inside are relics from another era: a ukulele, a fiddle, a smattering of 45s and an Edison phonograph. But when he sits down at his PC to make music, he’s immersed in the future.

It’s from this studio that Ballard, a copywriter and creative director, makes artificial intelligence-generated mash-ups for his popular YouTube channel, There I Ruined It, a genre-bending experiment he started when his western swing band was sidelined during the pandemic.

“At first, it was more about taking the video of a live performance or music video and then swapping out the sound. It might be Lady Gaga, but it sounds like polka music. Then it evolved into different things—mash-ups, AI and auto-tuning—and just a variety of ways to ruin music,” he says.

Ballard performs all the instruments on his tracks but uses an AI tool to transform his vocals into those of whatever artist he is spoofing. His efforts have caught the attention of the celebrities who inspired his songs. In May, an incredulous Snoop Dogg could be found on Instagram singing along to Ballard’s AI-generated mash-up of the rapper’s track “Gin and Juice” with the Jungle Book song “The Bare Necessities.”

There has been an explosion of AI-generated music featuring the living or resurrecting the dead—including David Bowie, Kurt Cobain and Jim Morrison, whose AI incarnations can now be found performing covers of songs by Radiohead, Oasis and Lana Del Rey, respectively.

“Heart on My Sleeve,” a track featuring AI-generated vocals from Drake and the Weeknd, was a streaming smash last year after it went viral on YouTube and Spotify. Even famous artists have gotten in on the action. French DJ and music producer David Guetta caused a stir when he created a song with AI Eminem vocals.

But as artists like Ballard push the limits of parody, fair use, right of publicity, infringement and authorship, there is one overarching question: Is any of this stuff legal?

Fair use?

It’s not just amateur musicians like Ballard living under a cloud of uncertainty. Record companies, artists and publishers are having to come to terms with the legal and creative conundrums AI presents.

In October, Universal Music Group and other music publishers filed a lawsuit against the artificial intelligence research company Anthropic for infringement, claiming its large language model, Claude, was trained without permission on copyrighted song lyrics.

Intellectual property lawyer Howard E. King, managing partner of the Los Angeles law firm King, Holmes, Paterno & Soriano, represented Pharrell Williams and Robin Thicke in their industry-shifting case over the song “Blurred Lines,” which Marvin Gaye’s family said lifted elements from his song “Got to Give It Up.”

The way King sees it, the biggest unresolved legal issue is whether or not artists, record labels and publishers can claim infringement as AI learns from copyrighted works, even if the output bears little relation to the original compositions or lyrics.

“That’s the $64 question,” King says.

There’s little doubt in the mind of Jessica Richard, senior vice president of federal public policy for the Recording Industry Association of America, that by the time AI outputs content, the infringement has already happened. The trade group is part of the Human Artistry Campaign, which was formed last year to protect artists’ rights amid the rise of AI.

“You’d have a separate analysis for copyright infringement on the output depending on the degree of similarity of the output to the input,” Richard explains. “But the infringement on the input would have already taken place.”

Others aren’t convinced. Emory University law professor Matthew Sag, an expert in intellectual property and copyright law, argues large language models do not so much copy work as predict the next line in a sequence.

“This is a process of deriving abstractions from the data. Those abstractions are not meant to be copyrightable,” he says. “My view is that as long as the outputs don’t look too much like inputs, then this is fair use under U.S. law.”

Fair use is a part of copyright law that allows the limited use of copyrighted material for commentary and criticism, parody and even research.

In a rebuttal filed Tuesday to the music publishers’ motion for a preliminary injunction, Anthropic said it was “confident that using copyrighted content as training data for a [large language model] is a fair use under the law—meaning that it is not infringement at all.” The filing argues that Claude is not designed to output copyrighted song lyrics, and if the AI did so, it must have been a bug.

There are precedents that Anthropic could fall back on, including a lawsuit against Google, Sag notes. Authors sued the tech company in a New York court over the millions of books Google scanned for its online full-text index. But in 2013, a federal district judge ruled that Google’s display of text snippets was “transformative” and qualified as fair use under copyright law. The New York City-based 2nd Circuit affirmed that decision in 2015.

The plaintiffs suing Anthropic could have an open-and-shut case if they could show that the AI is reproducing song lyrics in their entirety. But Sag thinks tech companies may already be adding filters to prevent that from happening.

In any case, he believes the “big payday” for music publishers and record companies would come from getting the court to agree that the underlying works the AI is trained on create “fruits from a poisonous tree,” even when the tech outputs original content.

“That gives them a basis to demand licensing fees in the future,” Sag says.

James Grimmelmann, professor of digital and information law at Cornell Tech and Cornell Law School, sees parallels with the legal cases from the ’90s settling the use of sampling from other records. The rulings shook hip-hop producers, who had sampled liberally from older records without getting clearance.

“I think if you were using AI to add specific elements of specific songs, that would look a lot like the legality of sampling,” he says. “If you’re going to use it commercially, you probably need a sampling license from the sound recording copyright owner.”

As for mash-ups, not all of them are parodies. For decades, music producers have combined elements of other songs to create new music.

Even if creators like Ballard do claim fair use, protection is not a given. In 2023, the U.S. Supreme Court sided with photographer Lynn Goldsmith against the Andy Warhol Foundation, finding that the purpose of commercially licensing a Warhol silk-screen print based on a photograph of Prince that Goldsmith shot in 1981 weighed against fair use.

The court’s decision narrows the definition of what counts as “transformative” under copyright law and makes it harder to claim a work is a parody, Grimmelmann says.

“Parodies are not automatically fair use. Transformative use is still only one part. Other facts, such as the commerciality of the use, the amount of the work used, and the effect on the market could all cut against the mash-up,” Grimmelmann says.

The imitation game

So far, the AI-generated mash-ups on the internet rely on mimicking an artist’s voice. And as the debate over what is or isn’t copyrightable rages, artists may feel tempted to avoid the issue altogether by leaning on a right of publicity claim, which gives them legal rights if their name, likeness or identity is used without their consent.

There is no federal right of publicity law, although AI has led to calls for legislation to change that. A majority of states either have some kind of right of publicity statute, protect it under common law, or do both.

Grimmelmann says the right of publicity in some states is perpetual. But in most, it can last anywhere from an artist’s lifetime to 20 to 100 years after their death. In other states, there is no posthumous right of publicity.

But AI has opened up yet another gray area. For example, what will happen if 200 years into the future, producers are still imitating dead artists to create new music?

“We haven’t tested the limits of that principle,” Grimmelmann says. “I don’t know if these indeterminately long rights of publicity will stand up. But officially, that’s what some states do.”

Blurry lines

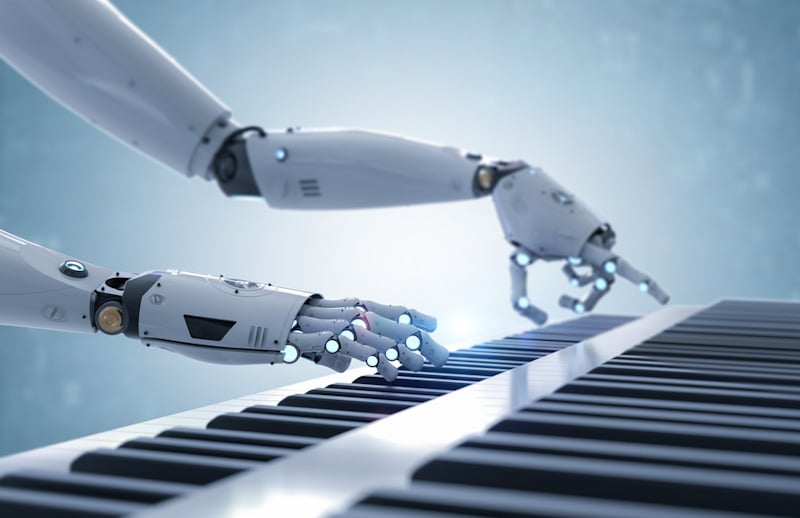

Then there is a lingering question about whether artists and producers will ever be able to claim authorship of AI-generated music.

The U.S. Copyright Office recognizes only works “created by a human being.” Its March 2023 guidance on AI states that “users do not exercise ultimate creative control over how [AI] systems interpret prompts and generate material.”

However, the lines are already being drawn. Some artists and experts argue that the process of inputting prompts to determine an output is as much a part of the creative process as, say, a photographer using a camera. According to this line of thinking, AI is just another tool in the musician’s toolbox, and what it produces should be copyrightable.

Grimmelmann says the copyright office’s stance doesn’t seem sustainable.

“There’ll be economic pressure to give some kind of protection. But also, artists will simply lie about the role of the AI when they apply to the copyright office. Before you know it, we’ll have thousands of registrations and no visible way of checking. At some point, there’s going to be too much water under the bridge to declare them all ineligible,” he says.

While the tech raises many unknowns, what seems almost certain is that judges and lawyers will have their hands full as they grapple with a technology set to upend the music industry and the creative process.

Given his experience in the “Blurred Lines” case, King questions the wisdom of putting matters into the hands of jurors, “none of whom know a thing about music.” Throw a new technology into the mix that many people barely understand, and things could get messy.

Still, King is confident that creators like Ballard will avoid any courtroom drama. There simply isn’t enough money to be made going after amateurs on YouTube. The process of taking down offending content is largely automated, he says.

“There’s no profit in a mash-up. You’re limited to statutory damages and maybe attorney’s fees. Unless you want to set a precedent, why would you do it?” King says.

In the meantime, Ballard has no intention of quitting. On his YouTube channel, you can find Eminem singing a bluegrass rendition of his hit “Without Me,” Hank Williams crooning Dr. Dre’s track “Still D.R.E.,” and a duet of Michael Jackson and Nicki Minaj singing over “Summer Nights” from the Grease soundtrack.

Or at least you could before Universal Music Group issued two takedown notices in November. Ballard says he had to make the channel private for 90 days because under YouTube’s three-strikes rule, he faces a permanent ban. He is working with a Los Angeles lawyer to see if he can have his mash-ups covered as parodies.

“But I don’t want to spend my entire waking hours fighting legal issues just for a social media channel that doesn’t stand to make me much money in the first place,” he adds.

King mostly represents artists, and he believes they could take legal action if AI is used to create a hit song that uses elements of their music. That’s where the money is. But in his conversations with executives, he senses they are on alert as they consider whether AI works are infringing.

“We talk to labels all the time, and we know that they have a keen eye on another profit-making opportunity,” he says.